Projects

Active Projects

Realizing Sociotechnical Machine Learning through Modeling, Explanations, and Reflections

This project will build on prior work in the lab, taking a human-in-the-loop approach to understanding how to make the technical components of sociotechnical systems (people and technology interacting) socially safer.

By examining technologists' reflections on their processes and their work, we will study how model-based approaches and explanation techniques help develop sociotechnical foresight: the ability of technologists to anticipate the social impacts of their work.

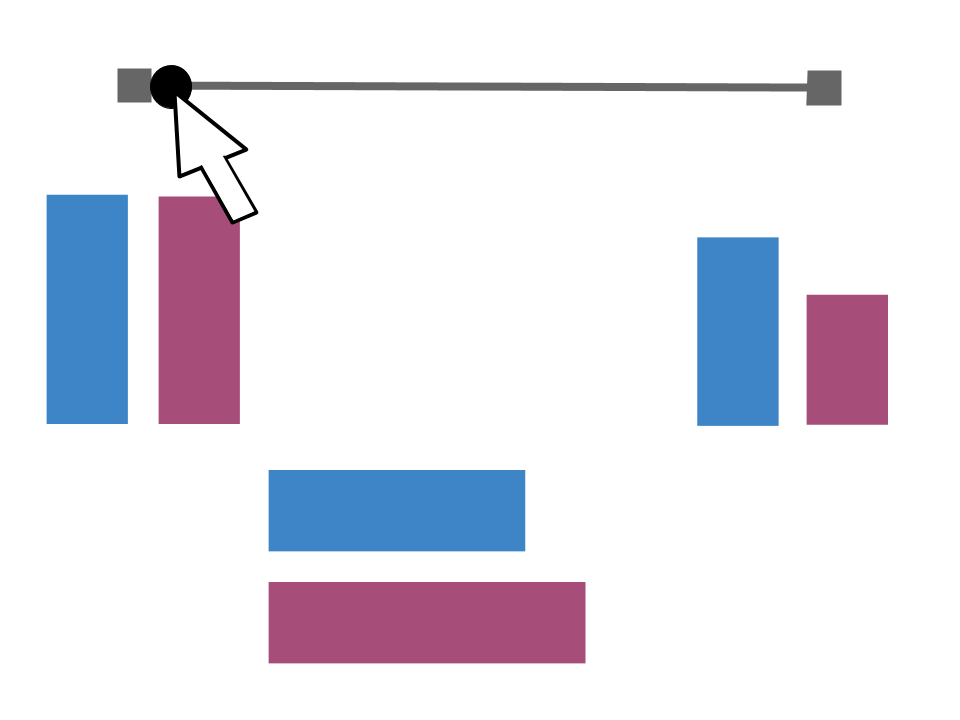

Model-Based Fairness Intervention Assessment

In this project, we are using bias models to evaluate the effectiveness of different types of fair machine learning interventions. We aim to use these insights to give data scientists more actionable advice on how to select a fairness intervention.

LLMs and Fair Data-Driven Decision-Making

We are building a benchmark to evaluate LLMs on making non-discriminatory decisions from data. It will cover making decisions directly, assisting with fair ML tasks, and agentically training fair models.

Perceptions of AI Fairness

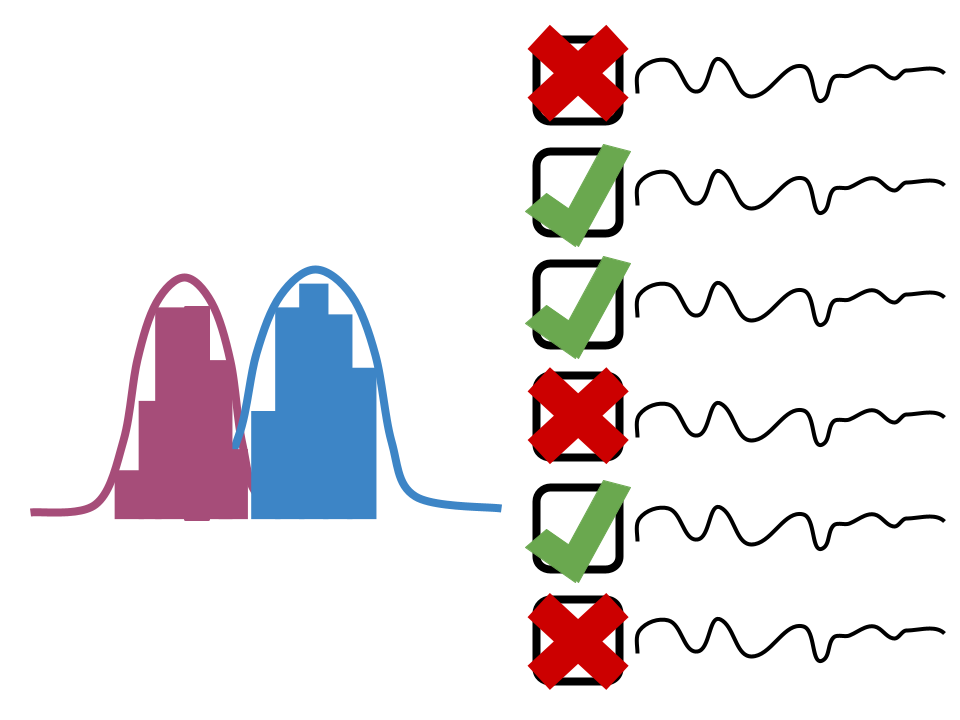

In collaboration with Malik Boykin’s lab in Cognitive, Linguistic, and Psychological Sciences at Brown, we are studying people’s preferred definitions of fairness and the social and algorithmic factors that influence these preferences. To support this work, we are also developing techniques to interpolate between definitions of fairness.

Task Level Fairness

In this project, we examine how fairness can be evaluated at the task and problem level, in order to develop heuristics for judging whether a fair model is feasible before any training takes place.

This project will produce a Python library that anyone can use in the exploratory data analysis (EDA) step of their project.

Past Projects

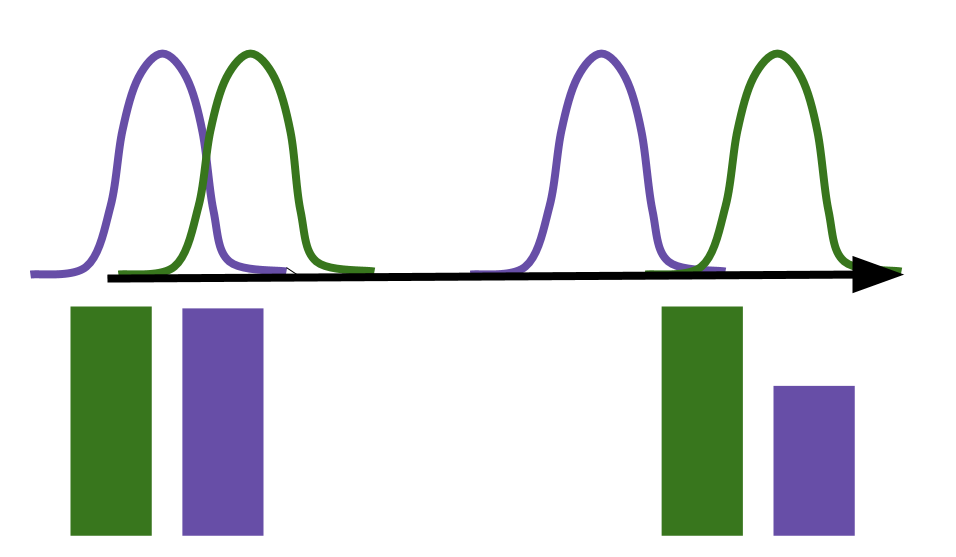

Dynamics of Fairness

(inactive, seeking a student)

How does fairness generalize, tolerate distribution shift, and propagate through interacting systems?

![fairness forensics](_static/img/fairness_forensics.png)

Wiggum

Fairness Forensics inspired by Simpson’s paradox, in collaboration with the OU Data Lab.
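For readers unfamiliar with the pattern that Wiggum's name references, the sketch below checks toy admissions counts for a Simpson's paradox reversal: one group has a higher rate in every subgroup, yet a lower rate once the subgroups are pooled. This is a generic illustration, not Wiggum's code or API. The counts, group labels ("A", "B"), subgroup labels ("X", "Y"), and function names are all hypothetical.

```python
from collections import defaultdict

# Hypothetical toy counts (not real data):
# (subgroup, group) -> (applicants, admitted)
records = {
    ("X", "A"): (80, 60),
    ("X", "B"): (20, 16),
    ("Y", "A"): (20, 4),
    ("Y", "B"): (80, 20),
}


def rates_by_subgroup(records):
    """Admission rate for each group within each subgroup."""
    out = defaultdict(dict)
    for (subgroup, group), (n, k) in records.items():
        out[subgroup][group] = k / n
    return dict(out)


def aggregate_rates(records):
    """Admission rate for each group after pooling all subgroups."""
    totals = defaultdict(lambda: [0, 0])  # group -> [applicants, admitted]
    for (_, group), (n, k) in records.items():
        totals[group][0] += n
        totals[group][1] += k
    return {group: k / n for group, (n, k) in totals.items()}


def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def has_simpsons_reversal(records, g1="A", g2="B"):
    """True if the g1-vs-g2 gap has the same nonzero sign in every subgroup
    but the opposite sign in the pooled data."""
    sub = rates_by_subgroup(records)
    agg = aggregate_rates(records)
    sub_signs = {sign(r[g1] - r[g2]) for r in sub.values()}
    if len(sub_signs) != 1:
        return False  # subgroups disagree, so there is no uniform trend to reverse
    (s,) = sub_signs
    return s != 0 and sign(agg[g1] - agg[g2]) == -s


if __name__ == "__main__":
    print("per subgroup:", rates_by_subgroup(records))
    print("pooled:", aggregate_rates(records))
    print("reversal:", has_simpsons_reversal(records))
```

With these toy numbers, group B is admitted at a higher rate within both X and Y, but group A has the higher pooled rate because most of A's applicants went to the subgroup with the higher admission rate.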